Automated Solution of Problems in Mathematics and Sciences

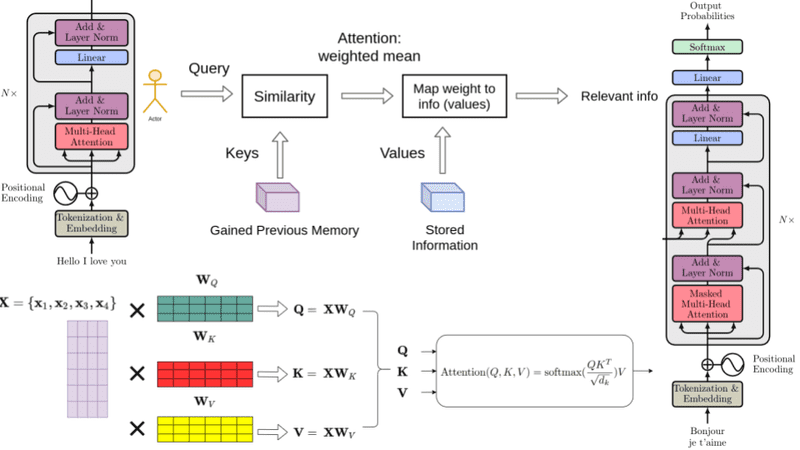

Deep neural networks have proven to be a very powerful hypothesis class and now dominate much of image, video, speech, and text analysis. Transformers are among the most powerful of these networks. In this project, we study tokenization schemes and modifications of transformer architectures for solving mathematics and science problems. Our initial results, published at NeurIPS-W and SDM, are promising, but they also identify pertinent issues in solving problems that involve non-linear operations or high-precision floating-point operations. We have also proposed state-of-the-art datasets of question-answer pairs in mathematics and sciences up to class 12 of the Indian CBSE syllabus. This project can potentially lead to automated grading or tutoring tools.

Some sample questions and the solutions predicted by our method are: